By Marijana Šarolić Robić*

[Source: Orgalim]

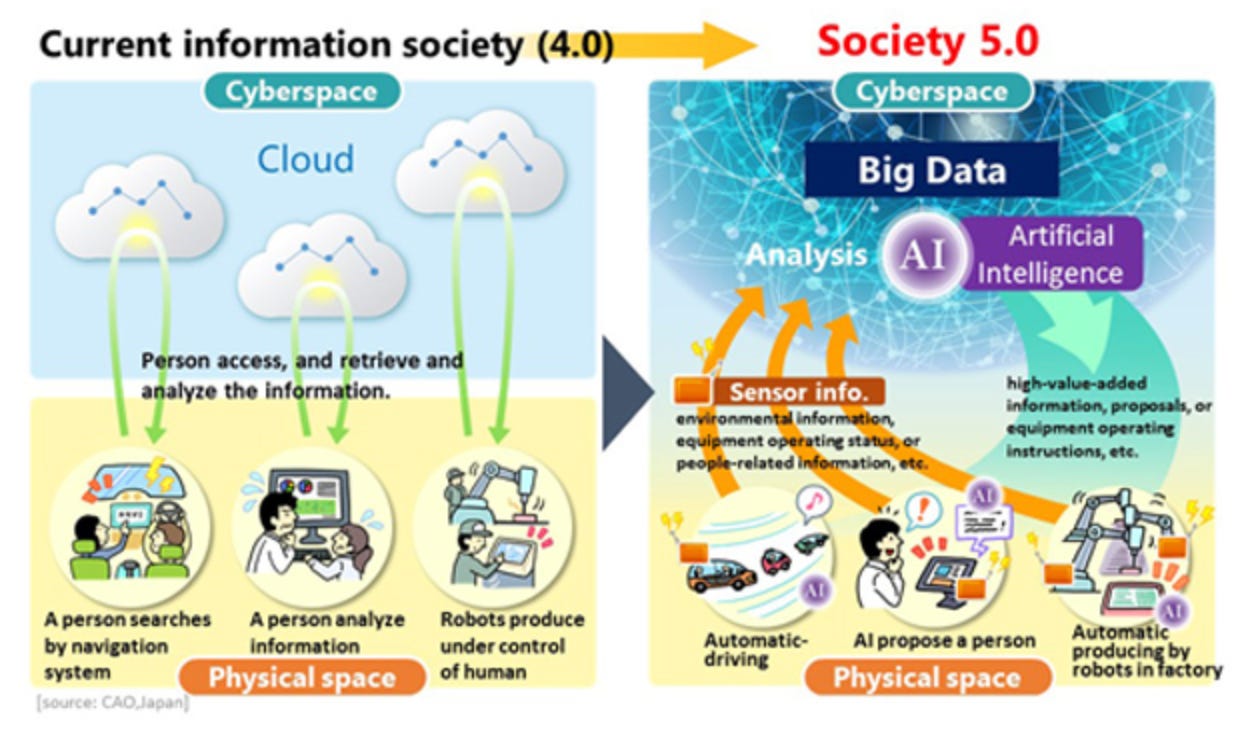

We are all aware of daily data overflow and continuous, almost unperceived, changes driven by technology in the way we live and work. It is becoming extremely difficult and complex to monitor, audit, and implement changes driven by technology in our everyday lives, both personal and professional.

This increased complexity has had a significant impact in the field of artificial intelligence (AI). The Hype Cycle for Emerging Technologies 2021, published by Gartner, points out the continued growth in the AI field. As the authors put it,

For example, generative AI is an emerging technology that the pharmaceutical industry is using to help reduce costs and time in drug discovery. Gartner predicts that by 2025, more than 30% of new drugs and materials will be systematically discovered using generative AI techniques. Generative AI will not only augment and accelerate design in many fields; it also has the potential to “invent” novel designs that humans may have otherwise missed.

We are already using AI daily in different tools and apps, such as social media, e-commerce, digital payment solutions, delivery services, gaming etc. Aware of the momentum, European Union (EU) regulators have been addressing AI use and related challenges since April 2018 and are currently discussing the proposed AI Act, which introduces a risk-based approach and foresees the establishment of a central EU register of high-risk AI systems with permanent compliance requests throughout AI systems life cycles. However, if you ask an average EU citizen about the AI Act, they are unlikely to know about it.

Related issues of accessibility, transparency, and skill sets required by EU citizens to use such technologies also need to be mentioned. Without the involvement of all stakeholders (policy makers, citizens, NGOs, academia, businesses, etc.) working permanently on awareness and education campaigns, the legislative change will bring hardly any change to regular citizens.

In the existing draft of the AI Act, the burden of implementation seems unfairly laid on industry shoulders, i.e., on small and medium enterprises (SMEs) – generally start-ups – bringing their AI-based products and services to markets and consumers. Under the proposed existing draft regulation, SMEs would have to leverage the burden of AI implementation and compete with global market players and companies originating from far less regulated markets. One can only hope that SMEs would be successful in such compliance procedures, but one cannot expect this without the active involvement of the above mentioned stakeholders in public campaigns.

Moreover, companies are not the sole stakeholders in the AI market, let alone sole beneficiaries. Placing responsibility solely on them might result in such companies leaving the EU market area and offering their AI-based products and services to other markets. EU citizens might find themselves in a position where AI-based products and services might not be available to them in EU markets due to overregulation and the inability of SMEs to comply with EU regulatory frameworks. This could lead to a loss of value creation for all stakeholders.

For example, if a start-up in the agricultural industry (e.g., providing an AI-driven solution for early warning systems of weed detection and elimination) is unable to satisfy the required regulatory framework, it could simply leave the EU and provide its services to farmers outside the EU. Similarly, a company providing wellbeing and lifestyle AI software, which helps prevent or even eliminate diseases, such as high pressure or high cholesterol, or provides recommendations on nutrition, exercise, lifestyle, and regular monitoring of user health status, could leave the EU market.

Educational campaigns like the ones initiated during the Finnish EU presidency in 2019, providing free online educational tools on the basics of AI, could make a real difference on tackling accessibility and awareness issues. Such educational campaigns might help to support a more just distribution of AI benefits to average citizens by empowering them to identify such benefits in their private and professional lives (e.g., easier, cheaper, more accessible transfer and access to financing through various fintech solutions, better control and monitoring of different health conditions, etc.). However, an active public campaign and the promotion of a lifelong learning lifestyle is a precondition.

The world of today is becoming more inaccessible and more difficult to understand to people who do not keep up with technological changes. If we continue on this path, the result could be the creation of different citizen classes: technology literate ones and technology illiterate, technology consumers or technology makers. As Christine Lagarde explained in her February 2021 podcast episode for The Economist, we must jointly (re)define the values and principles we hold to be pillars of our civilization and ensure that all stakeholders take part and have access to such process within democratic frameworks.

Only open dialogue and fair distribution of benefit and burden will result in a more fair and just distribution of AI. The initial step must be taken by each of us individually, by constantly learning and updating our knowledge in field of technology, AI included. We must strive to become not only technology users, but also technology makers and ensure a focus on just and fair distributions of AI technology benefits for all stakeholders. It is important to understand and remember that a technical background is not precondition, as AI is already embroidered in many aspects of our life. What we need are experts from all walks of life to jointly work on technology creation, development, implementation, and usage. There is room for everyone in the AI world. Do come and join us!

Share

*Marijana Šarolić Robić is an AI enthusiast who has worked as a lawyer for almost 20 years. Since 2013, Marijana has supported the local start-up community as a mentor and has been an active stakeholder in the creation of the Croatian Artificial Intelligence Association (CROAI). Her field of work is the technology driven economy, with a specialty in shareholder relations, incorporation, and regulatory frameworks. She finished her EMBA in 2015 and has been one of the founders and President of PWMN Croatia/PWN Zagreb, an NGO that promotes gender equity in business environment since 2016.

Due to unforeseen circumstances, today’s scheduled event with the author of this article, Marijana Šarolić Robić, has been postponed until 2022.

Further details will follow.